Search and curate data

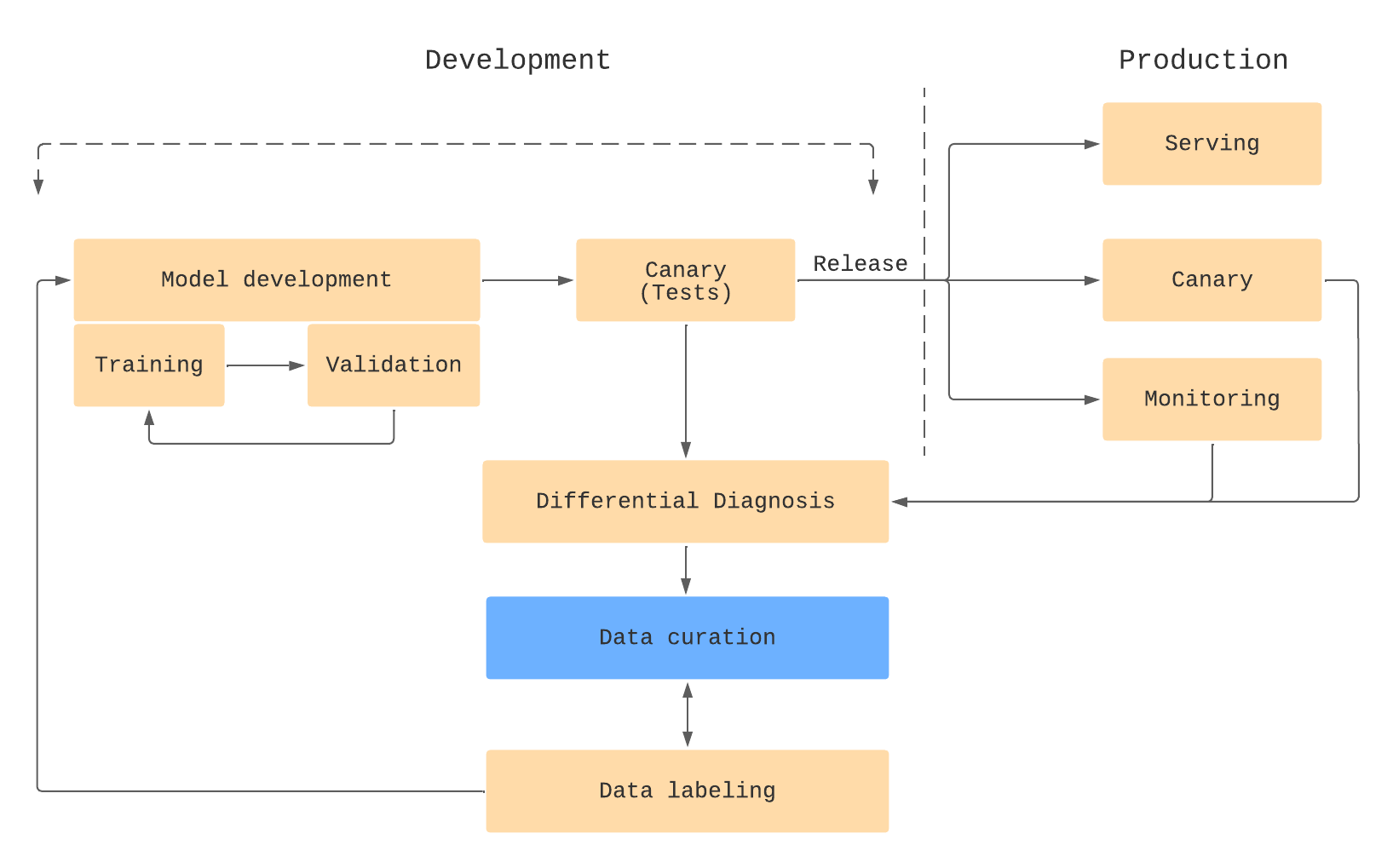

Data curation is an essential step in building modern production AI systems. It is the act of prioritizing which data to label or re-label (including improving data quality) so that each iteration increases model performance as much as possible.

It is not efficient to label your entire dataset in one attempt.

Instead, Labelbox recommends creating training data iteratively. At each iteration, you can use various insights to prioritize the data that will most increase model performance. Here are some best practices for iteratively creating training data.

- You can start by labeling a small fraction of the dataset, train a model, and understand where your model is doing poorly or is not confident. Then, leverage your insights to prioritize what to label next, and repeat.

- You can surface edge cases that are challenging for humans and prioritize labeling more of them.

- You can gain insights into which data is and is not redundant and prioritize labeling non-redundant data.

- You can discover that some labeling instructions were unclear and adjust labeling instructions along the way.

- You can discover that your ontology needs adjustments and adjust it along the way.

By labeling in iterations, you will develop better ML models faster while keeping human labeling costs at a minimum.
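The first practice in the list above (label a little, train, then label where the model is not confident) is often implemented as least-confidence sampling. The following is a minimal sketch under stated assumptions, not a Labelbox API: the function `least_confident`, the row IDs, and the probability values are all hypothetical, and the class probabilities are assumed to come from whatever model you trained on the first labeled batch.

```python
from typing import Dict, List


def least_confident(predictions: Dict[str, List[float]], batch_size: int) -> List[str]:
    """Return the IDs of the rows whose top predicted probability is lowest."""
    # Confidence of a row = probability assigned to its most likely class.
    confidence = {row_id: max(probs) for row_id, probs in predictions.items()}
    # Sort ascending so the least confident rows come first (ties broken by ID).
    ranked = sorted(confidence, key=lambda row_id: (confidence[row_id], row_id))
    return ranked[:batch_size]


# Hypothetical model outputs for four unlabeled data rows (class probabilities).
predictions = {
    "row-a": [0.98, 0.01, 0.01],
    "row-b": [0.40, 0.35, 0.25],
    "row-c": [0.55, 0.44, 0.01],
    "row-d": [0.90, 0.05, 0.05],
}

next_batch = least_confident(predictions, batch_size=2)
print(next_batch)  # ['row-b', 'row-c']
```

Each iteration would label the returned rows, retrain, and rerun the selection. Other uncertainty measures, such as entropy or the margin between the top two classes, fit the same loop.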

The insights needed to select and prioritize data can be gained in numerous ways. Below are a few examples of how machine learning teams gain insights that help them select and prioritize data for labeling.

Human insights

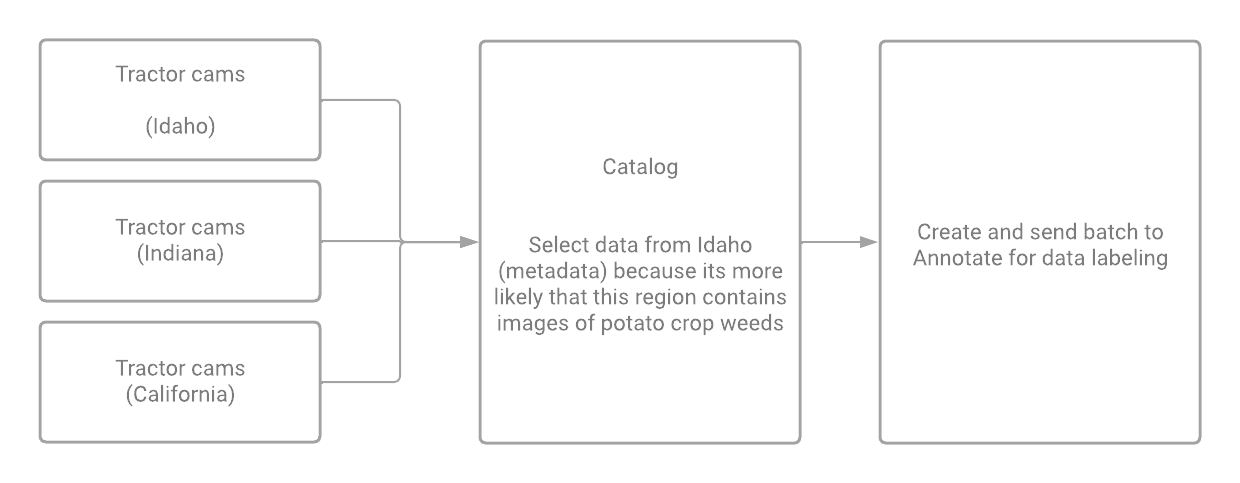

During AI development, AI teams often have insights into the nature of the problem. Below is an example of data selection driven by human insight.

A company is using AI to develop a crop weed detection system. To accomplish this, they are collecting data from sensors across the country. As they collect data, they observe that the crop weeds are highly diverse and region-specific. Instead of developing a single model that works across the country, the team chooses to focus on a specific crop type (e.g. potatoes). To gather data for this crop-specific model, the company uses Catalog to search and filter for data that is likely to have a higher density of positive examples of potato crop weeds, for example by filtering on region, season, and tractor model type.

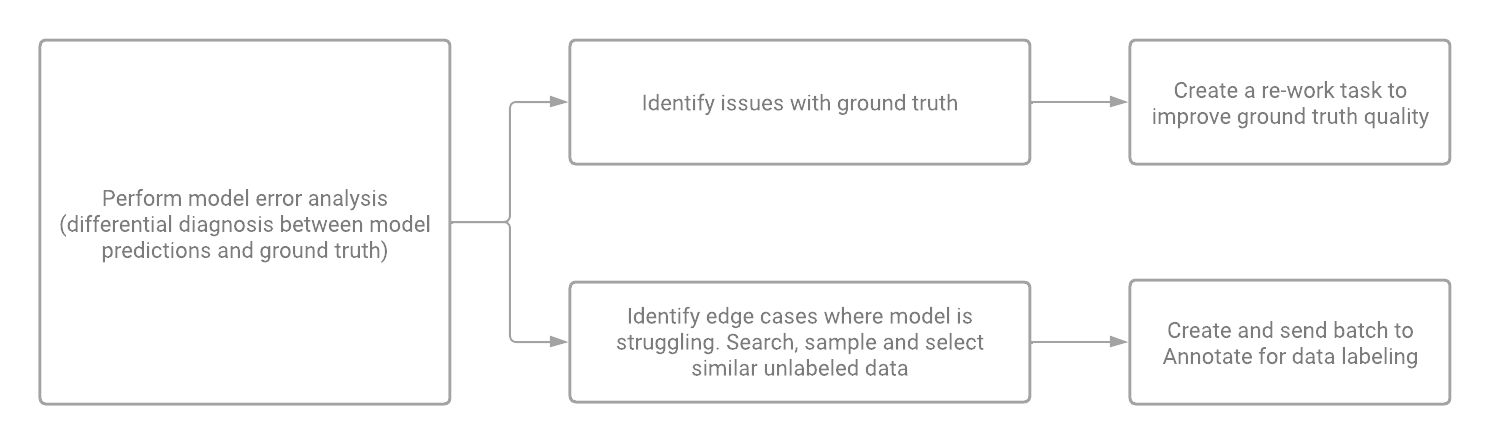

Model performance-based insights

During the iterative cycle of AI development, AI teams may ask themselves the following questions:

- What unlabeled data should I prioritize for labeling in order to improve model performance?

- What existing ground truth labels should I prioritize for re-work in order to improve model performance?

Performing robust model error analysis leads AI teams to take the following actions.

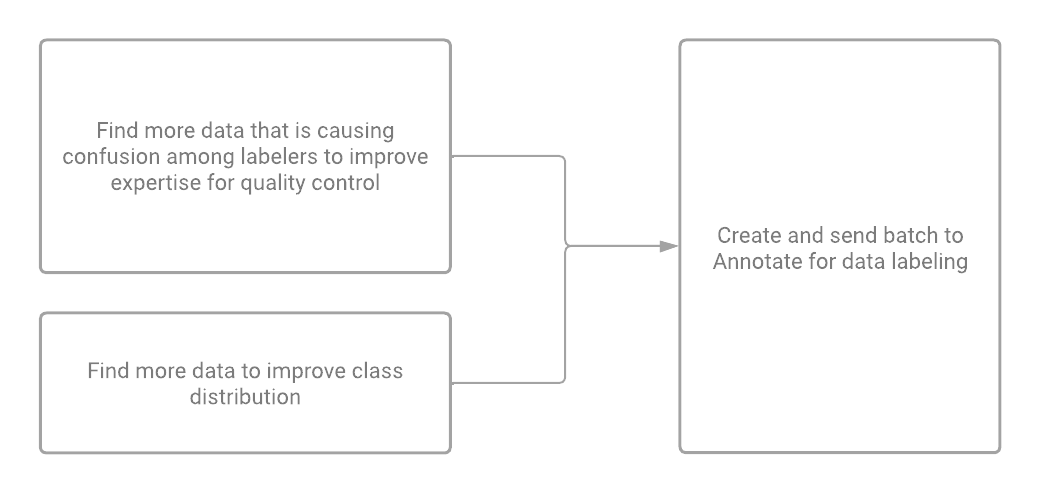
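One common way to approach the second question above (which ground truth labels to send for re-work) is to look for rows where the model confidently disagrees with the existing label. The sketch below illustrates that idea only. The `LabeledRow` type, the `rework_candidates` function, the 0.9 threshold, and the sample rows are all hypothetical, and a confident disagreement is a hint that a label deserves review, not proof that it is wrong.

```python
from typing import List, NamedTuple


class LabeledRow(NamedTuple):
    row_id: str
    ground_truth: str   # label currently stored for the row
    predicted: str      # class predicted by the model
    confidence: float   # model probability for the predicted class


def rework_candidates(rows: List[LabeledRow], min_confidence: float = 0.9) -> List[str]:
    """Return IDs of rows where a confident prediction contradicts the label."""
    disputed = [
        row for row in rows
        if row.predicted != row.ground_truth and row.confidence >= min_confidence
    ]
    # Most confident disagreements first: these are reviewed before the rest.
    disputed.sort(key=lambda row: row.confidence, reverse=True)
    return [row.row_id for row in disputed]


# Hypothetical labeled rows with model predictions attached.
rows = [
    LabeledRow("r1", "weed", "weed", 0.99),  # model agrees with the label
    LabeledRow("r2", "weed", "crop", 0.95),  # confident disagreement
    LabeledRow("r3", "crop", "weed", 0.60),  # disagreement, but low confidence
    LabeledRow("r4", "crop", "weed", 0.97),  # confident disagreement
]

to_review = rework_candidates(rows)
print(to_review)  # ['r4', 'r2']
```

Low-confidence disagreements such as `r3` are left out here because they more often point to a weak model than to a bad label. They are better candidates for the first question, which data to label next.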

Insights derived during data labeling

During data labeling, you may uncover hard or confusing examples (e.g. a certain kind of manufacturing defect) that cause your labeling team to misclassify them. When this happens, it is best practice to find more examples like these in your unlabeled data and prioritize those data rows for labeling. Doing so can improve expertise (familiarity) among the data labelers.
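Finding more examples like a confusing one is typically a similarity search over embeddings. The sketch below assumes you already have an embedding vector for each data row. The functions `cosine_similarity` and `most_similar`, the row IDs, and the three-dimensional vectors are hypothetical and only illustrate the ranking step.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query: Sequence[float],
                 candidates: Dict[str, Sequence[float]],
                 top_k: int) -> List[str]:
    """Return the IDs of the top_k candidates closest to the query embedding."""
    ranked = sorted(
        candidates,
        key=lambda row_id: cosine_similarity(query, candidates[row_id]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical embedding of a confusing example found during labeling.
hard_example = [1.0, 0.0, 0.2]

# Hypothetical embeddings of unlabeled data rows.
unlabeled = {
    "u1": [0.9, 0.1, 0.2],
    "u2": [0.0, 1.0, 0.0],
    "u3": [1.0, 0.0, 0.0],
    "u4": [-1.0, 0.0, 0.0],
}

similar_rows = most_similar(hard_example, unlabeled, top_k=2)
print(similar_rows)  # ['u1', 'u3']
```

A linear scan like this is fine for small sets. For large datasets an approximate nearest-neighbor index is the usual substitute.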

Another best practice is to improve class distribution by purposefully selecting and prioritizing data that is likely to contain rarer classes.

For both of these situations, you can use Catalog to send prioritized data rows to the labeling queue.
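Selecting data that is likely to contain rarer classes can be sketched as follows. This assumes a model (or a heuristic) has already guessed a class for each unlabeled row. The function `prioritize_rare`, the class names, and the counts are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def prioritize_rare(labeled_classes: List[str],
                    predicted_classes: Dict[str, str],
                    batch_size: int) -> List[str]:
    """Rank unlabeled rows so that predicted-rare classes are labeled first."""
    # How many labels each class already has; unseen classes count as zero.
    counts = Counter(labeled_classes)
    ranked = sorted(
        predicted_classes,
        key=lambda row_id: (counts[predicted_classes[row_id]], row_id),
    )
    return ranked[:batch_size]


# Hypothetical current label distribution: "crack" is badly under-represented.
labeled = ["scratch"] * 50 + ["dent"] * 8 + ["crack"] * 2

# Hypothetical predicted classes for unlabeled data rows.
predicted = {"u1": "scratch", "u2": "crack", "u3": "dent", "u4": "crack"}

queue = prioritize_rare(labeled, predicted, batch_size=3)
print(queue)  # ['u2', 'u4', 'u3']
```

Because the predictions for rare classes are themselves unreliable early on, it can help to rerun this ranking after each labeling iteration, once the label counts and the model have both been updated.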
