Prioritize high value data to label (active learning)

How to use uncertainty sampling to perform active learning.

Machine learning teams tend to have more unlabeled data than they can label. Labelbox therefore recommends choosing carefully which data to label.

Machine learning teams often end up labeling more data than they need, yet not all data impacts model performance equally. Labelbox recommends aiming to label the right data, not just more data. In many cases, ML teams can achieve the same model performance on a smaller labeling budget.

Active learning is a practice where, given a trained model, you identify which data would be most useful to label next, so that each round of labeling improves model performance as efficiently as possible.

Labelbox is an intuitive launchpad for data-centric AI development. As you go through training iterations, Labelbox helps you identify high-value unlabeled data rows that will optimally boost model performance. You should prioritize labeling these data rows first, re-train the model on the new dataset, and repeat.

Uncertainty sampling (active learning)

Uncertainty sampling is the practice of getting human feedback when a model is uncertain in its predictions. The intention behind uncertainty sampling is to understand which data rows your model is likely to be less confident predicting and to target those data rows in your next labeling project. If the model is uncertain on some data rows, then getting humans to label similar data rows will likely help the model improve its predictions.
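As a rough illustration of what "uncertain" can mean in practice, the sketch below computes three common uncertainty measures from class probabilities and ranks data rows by one of them. This is a minimal standard-library example and not part of the Labelbox SDK; the `predictions` dictionary, its row IDs, and its probabilities are hypothetical values chosen for illustration.

```python
import math


def least_confidence(probs):
    """1 minus the top class probability: higher means more uncertain."""
    return 1.0 - max(probs)


def margin(probs):
    """Gap between the two most likely classes: smaller means more uncertain.

    Assumes at least two classes.
    """
    top_two = sorted(probs, reverse=True)[:2]
    return top_two[0] - top_two[1]


def entropy(probs):
    """Shannon entropy in nats: higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical model output: data row ID -> class probabilities.
predictions = {
    "row_a": [0.98, 0.01, 0.01],
    "row_b": [0.40, 0.35, 0.25],
    "row_c": [0.70, 0.20, 0.10],
}

# Rank data rows from most to least uncertain using least confidence.
ranked = sorted(
    predictions,
    key=lambda row_id: least_confidence(predictions[row_id]),
    reverse=True,
)
print(ranked)
```

Which measure works best depends on the task. Least confidence only looks at the top class, while margin and entropy also take the runner-up classes into account.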

How to do uncertainty sampling in Labelbox

- First, create a model and model run to host unlabeled data rows. Currently, this can only be done via the SDK. Support in the UI is coming soon.

      # Create a Model and Model Run.
      # Assumes `client`, `project`, `model_name`, and `model_version` are already defined.
      lb_model = client.create_model(name=model_name, ontology_id=project.ontology().uid)  # use the ontology from a Project
      lb_model_run = lb_model.create_model_run(model_version)

- Use your trained model to generate model predictions and confidence scores on the unlabeled data rows.

- Upload these unlabeled data rows to the model run.

      # Upload unlabeled data rows to the Model Run.
      lb_model_run.upsert_data_rows(data_row_ids)

Then, upload model predictions and model metrics on these unlabeled data rows using the SDK.
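For the prediction step, one common way to obtain a confidence score from a classifier is to apply a softmax to its raw outputs and take the highest probability. The sketch below assumes a classification model that returns one logit per class; `raw_outputs`, `class_names`, and `predict_with_confidence` are hypothetical names for illustration, and the code does not call the Labelbox SDK. Treat the resulting score as a rough proxy, since softmax probabilities are not always well calibrated.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_with_confidence(logits, class_names):
    """Return (predicted class name, confidence) for one data row."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical raw model outputs keyed by data row ID.
class_names = ["cat", "dog", "bird"]
raw_outputs = {
    "row_a": [4.0, 0.5, 0.1],
    "row_b": [1.1, 1.0, 0.9],
}

scored = {
    row_id: predict_with_confidence(logits, class_names)
    for row_id, logits in raw_outputs.items()
}

# The IDs of the scored data rows, in the order they were processed.
data_row_ids = list(scored)
```

Here `row_b` ends up with a much lower confidence than `row_a`, so it would be the stronger candidate for labeling.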

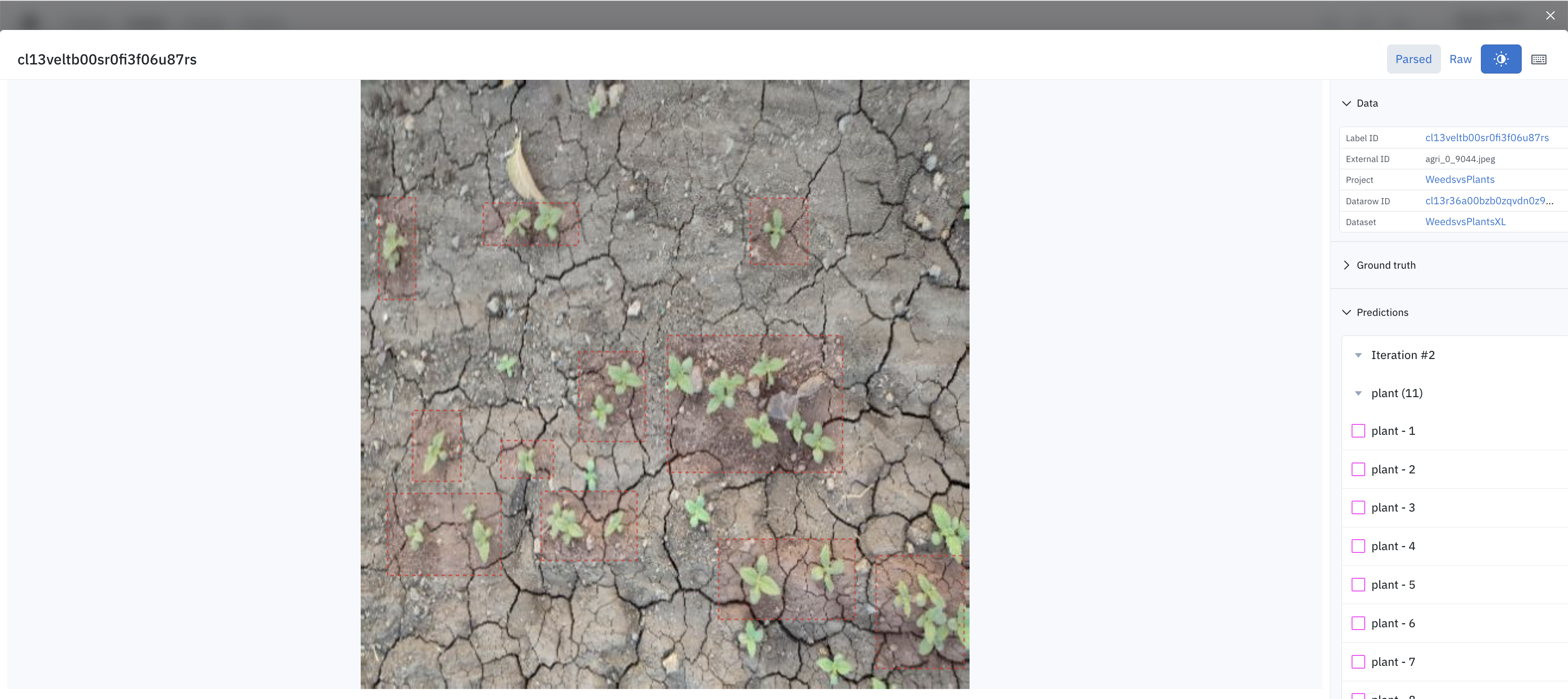

- In the Labelbox App, navigate to the model run that contains these unlabeled data rows, and sort them by confidence so that the least confident predictions come first. You can click any thumbnail to open the detailed view and visually inspect the predictions (on unlabeled data rows) on which the model is least confident.

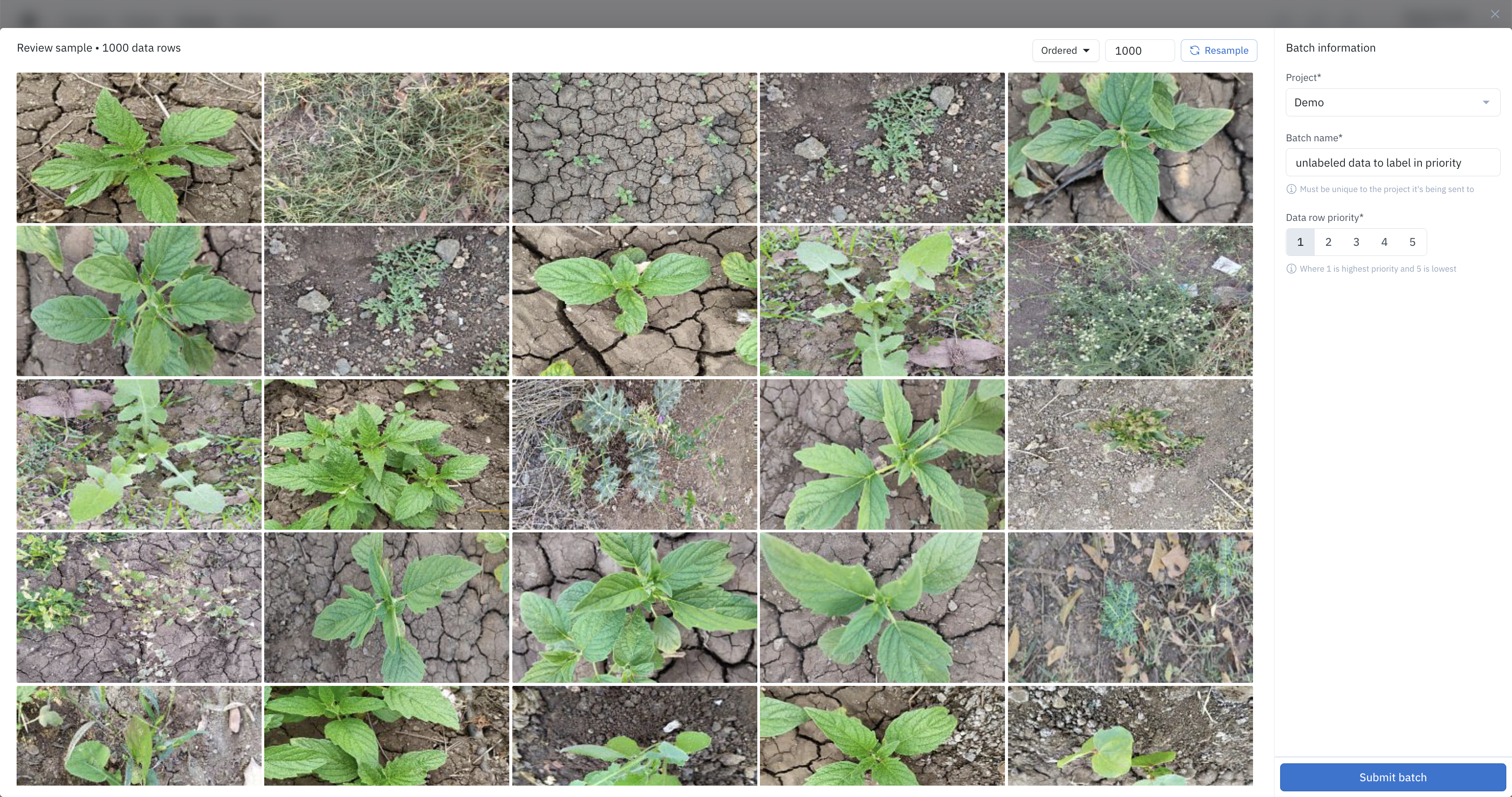

- Now, select the unlabeled data rows on which the model is least confident (for example, the top 1,000) and open them in the Catalog.

  - Click 1000 selected.

  - Click View in Catalog.

The selected data rows should appear at the top of the Catalog.

- Send these data rows for labeling using batches.

  - Click Add batch to project.

  - Select the destination labeling project.

  - Click Submit batch.

Once this additional data has been labeled in Labelbox, you can create a new model run, include the newly labeled data rows in your data splits, and retrain your machine learning model to improve it.

Congratulations, you have done an iteration of uncertainty sampling (active learning)!
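Repeating this iteration gives the full active learning loop: train, score the unlabeled pool, label the least confident items, and retrain. The toy simulation below sketches that loop under heavy simplifying assumptions: the "model" is a one-dimensional threshold, confidence is the distance from that threshold, and the hypothetical `oracle` function stands in for human labelers. None of these names come from the Labelbox SDK.

```python
def train(labeled):
    """Toy model: a threshold halfway between the two class means."""
    zeros = [x for x, y in labeled if y == 0]
    ones = [x for x, y in labeled if y == 1]
    return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2


def confidence(threshold, x):
    """Toy confidence: distance from the decision threshold."""
    return abs(x - threshold)


def oracle(x):
    """Stand-in for human labeling; the true boundary is assumed to be 0.5."""
    return int(x >= 0.5)


labeled = [(0.0, 0), (1.0, 1)]                   # small starting training set
pool = [0.1, 0.3, 0.45, 0.55, 0.62, 0.8, 0.9]    # unlabeled data
BATCH_SIZE = 2                                   # labeling budget per round

for round_number in range(2):
    threshold = train(labeled)
    # Least confident items first.
    pool.sort(key=lambda x: confidence(threshold, x))
    batch, pool = pool[:BATCH_SIZE], pool[BATCH_SIZE:]
    # "Label" the batch and add it to the training set.
    labeled += [(x, oracle(x)) for x in batch]

final_threshold = train(labeled)
print(len(labeled), len(pool), round(final_threshold, 3))
```

In each round, the points closest to the current threshold are labeled first, which mirrors sending the least confident data rows to a labeling project before retraining.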
